At this point it seems basically certain that AI will be a major economic transition. The only real question is how far it goes and how fast. In a previous essay I talked through four scenarios for what the coming few years might look like. In this essay I want to think through how to invest against those scenarios.

Scenario 1 (“damp squib”) is one where AI remains mostly a toy with no meaningful economic impact. The investment consequences are pretty simple – avoid AI investments, or if you’re really brave, short the bubble. However, this scenario seems less likely to me by the day.

Scenario 2 (“a typical cycle”) is one where AI is comparable in impact to many other recent tech waves, for example the rise of smartphones. If AI were frozen around current capability levels, I think this is what we’d experience. The investment strategy here is broadly in line with the playbook of the last few decades.

In this post I want to focus on Scenario 3 (“Jetsons world”), where AI is capable of doing median white-collar work. In the next post I’ll cover Scenario 4 (“take-off”), where AI outclasses humans at almost all intellectual work. I put the probability that things play out along one of these two lines pretty high, above 50%.

Timelines

Being early is the same as being wrong, so let’s first nail down the timeline. As of December 2024, GPT-4 is still a frontier base model, the anchor for all other AI capabilities. It launched in March 2023[1], with a training cost estimated at around $100m[2]. Next-generation base models are due shortly. In 2025 we will see the first $1B models, and by 2026/27 the $10B models will land. The latter will use 100 times the training compute of GPT-4, the same as the step from GPT-3 to GPT-4. The major labs are fully committed to the $10B model generation: the chip orders have been placed and the data centers are being constructed, so barring an act of God this card will get turned over[3].

What happens after that is harder to say. Nobody really knows how these models will perform qualitatively. Truly transformative AI is one possible outcome, and well-informed insiders like Dario Amodei and Leopold Aschenbrenner have written about how that might play out. It would be a world-historic event, but there is no obvious reason it can’t happen on a short timeline.

However, it is also possible the $10B models fall short in one way or another. What happens then? Here, the recent OpenAI o1 model changed the calculus significantly for me, because it makes the capability frontier more elastic[4]. Prior to o1, if your $10B model was not quite good enough for economically valuable tasks, your only real option was to spend $100B to $1T on the next model generation in the hope that it unlocks the capability. With o1, you don’t have to buy the whole bundle of capabilities at once; you can selectively improve performance (at least on some task types) by spending more at inference time[5]. This makes me significantly more bullish that by 2027 we will have models capable of economically useful work across many domains.

This is not a sure thing. If the 2027 models aren’t sufficiently economically productive, the AI bubble likely bursts at that point. It is technically and physically possible to keep scaling base models until at least 2030[6], but it seems like a very tall order to spend the necessary $100B–$1T without an absolutely clear line of sight to economic or geopolitical value[7]. In the absence of that, we can expect another AI winter, where progress falls back to the comparatively slow pace of Moore’s Law and/or waits for a new technical breakthrough[8].

So, the game is set. We will see the turn card in 2025, and the river in 2027. It could be a very big pot. Place your bets.

Imagining “Jetsons World”

Before I get to a specific investment case, I want to first imagine in detail what the world might look like a few years out. I’ll focus on scenario 3, where we get AI capable of doing median white-collar work, but not capable of winning a Nobel prize. This would disappoint the AI true believers, but would still be extremely disruptive to most of society.

I’ve previously described how it might play out in general terms here. For this investment analysis, let’s get a bit more specific about how exactly the landscape might look at a nuts-and-bolts level.

Ecosystems of models

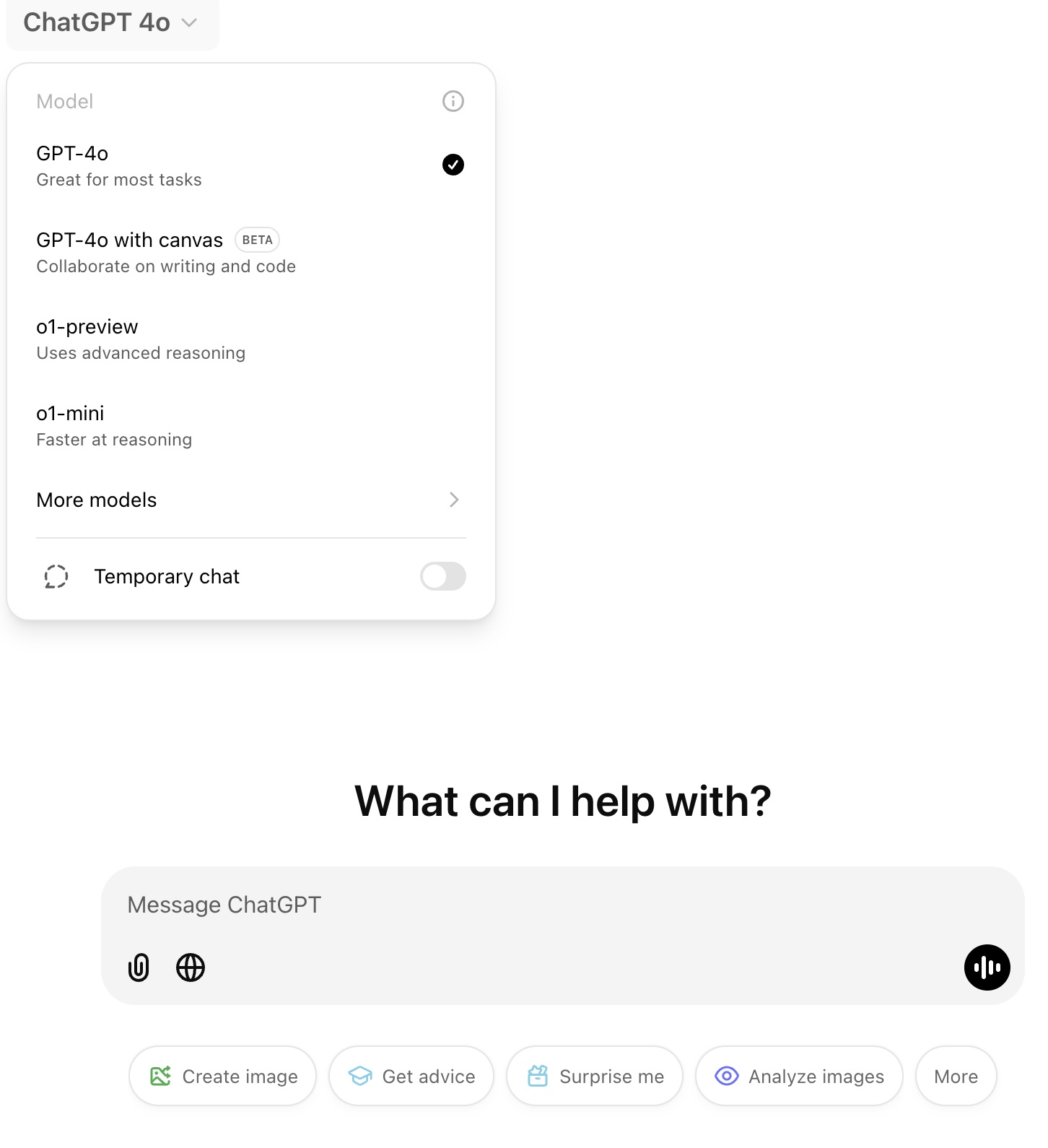

Currently we’re in a very basic state where users manually select models to talk to: OpenAI literally has a drop-down to choose between GPT-4o and o1. In time, there’s going to be a hierarchy of models with dynamic routing between them, largely invisible to the user. A small model running on your local device handles simpler tasks with low latency. More complex queries are routed transparently to one of a larger array of models in the cloud. Inference time compute will vary. Sometimes you might get a response like “This will take 3 minutes and cost $11.50. Continue?”
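To make the routing idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the tier names, costs, latencies, the confirmation threshold, and the assumption that some upstream classifier has already produced a difficulty score between 0 and 1. A real router would use much richer signals; this only illustrates the shape of "cheapest capable model first, ask before spending a lot".

```python
from dataclasses import dataclass


# Hypothetical model tiers; names, costs and latencies are illustrative only.
@dataclass
class ModelTier:
    name: str
    max_difficulty: float  # highest difficulty score this tier is trusted with
    cost_usd: float        # assumed cost per request
    est_seconds: float     # assumed response time


# Ordered cheapest first, so routing falls through to expensive tiers only when needed.
TIERS = [
    ModelTier("on-device-small", 0.3, 0.0, 0.5),
    ModelTier("cloud-general", 0.7, 0.02, 5.0),
    ModelTier("cloud-reasoning", 1.0, 11.50, 180.0),
]

CONFIRM_ABOVE_USD = 1.00  # assumed threshold above which the user is asked first


def route(difficulty: float) -> ModelTier:
    """Pick the cheapest tier whose capability covers the estimated difficulty."""
    for tier in TIERS:
        if difficulty <= tier.max_difficulty:
            return tier
    return TIERS[-1]


def plan_request(difficulty: float) -> str:
    """Return either a routing decision or a cost confirmation prompt."""
    tier = route(difficulty)
    if tier.cost_usd > CONFIRM_ABOVE_USD:
        minutes = tier.est_seconds / 60
        return (f"This will take {minutes:.0f} minutes and cost "
                f"${tier.cost_usd:.2f}. Continue?")
    return f"Routing to {tier.name}"


print(plan_request(0.1))   # Routing to on-device-small
print(plan_request(0.95))  # This will take 3 minutes and cost $11.50. Continue?
```

Most requests never leave the device or the cheap cloud tier; only the hard ones trigger the kind of confirmation message described above.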

In high-value domains (e.g. medicine, accountancy, biology) there will be specialized models. In corporate settings these are likely selected contractually. For personal use, a general-purpose model might sometimes route to them dynamically for a particular query.

In a market with lots of competing model makers, most of the economic power lies with whoever owns the direct relationship with the user. Though if a particular model supplier has very differentiated capabilities, they could also have pricing power.

Control the memories, control the user

For the consumer use case, you can tell companies are not really playing the game for real yet, because everything is stateless. Close the chat and it forgets everything about you. Switching costs are almost zero, and I’ve personally migrated back and forth from ChatGPT to Claude multiple times without it costing me a thought.

This all changes as soon as AI has memory. An executive assistant who gets to know you over years is painful to replace. This is the point when the fight for market share really begins.

We are still some distance from that starting line. OpenAI recently introduced a memory feature, but it’s rudimentary. Google and Microsoft (and to a lesser extent Apple) potentially have a big advantage, since they already store most of our digital lives. Their assistants will have all the right access permissions out of the box, and potentially can arrive pre-personalized using our stored data. However, no company has a complete view of our digital lives, and a really good user-space agent (which would have a universal view, and could come from any company) could be a winning form factor. A lot depends on how feasible that actually is – as with many things, it turns on nitty-gritty details. Desktop seems relatively straightforward, but it’s not so obvious it can be done well on mobile[9].

Agents

Models are clearly going to start taking actions on behalf of the user some time in 2025, initially in very basic ways, for example: “Give me some options for my trip to London next week (…) Great, please book this flight and that hotel”. Companies that provide the plumbing for taking actions (e.g. Stripe for payments, maybe Booking.com for hotels, etc.) should be mostly insulated from disruption and experience the AI revolution as a tailwind.

Within companies, agents can run continuously on a set of tasks. Agents will gradually become capable of taking on ever larger and more complex projects, to the point they become indistinguishable from a typical human remote worker.

Devices

At some point we’ll get a new kind of personal device enabled by AI – though it may take longer to arrive than AGI does. Meta’s Ray-Ban glasses are one potential form factor, already showing early traction. Earbuds-with-cameras (Her-style) are another. They won’t replace phones, in the same way phones didn’t replace laptops, but will gradually displace some usage. I think this could be pretty popular – at least as common as, say, smartwatches.

Autonomous Vehicles

I expect fully autonomous vehicles to be broadly available before 2030. Waymo already does 100k paid rides a week across several US cities[10], and the latest Tesla autopilot is getting pretty good. Personally, I would bet on Tesla’s approach in the medium term: they have more and better data, and their approach is cheaper and easier to scale.

Uber handles 160m rides a week, so Waymo’s 100k rides represent about 0.06% of Uber’s volume. How fast could we reach, say, 10% of US ride-hailing journeys being autonomous? Pretty fast. Uber has 7m drivers. Tesla currently sells ~2m cars a year, and the autonomy package is just cameras and compute, so it’s easy to scale up. If Tesla reached L4 tomorrow, even if it were limited to new vehicles, they could ship enough to handle >10% of US ride hailing within a few quarters, and match the entire Uber fleet within a few years.
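The arithmetic behind this paragraph can be checked in a few lines of Python. It uses only the figures quoted in the text, plus simplifications that are assumptions, not data: the Uber figures are global rather than US-only, one robotaxi is assumed to replace one Uber driver (likely conservative, since many drivers are part time), and every Tesla sold is assumed to go into ride-hailing, which makes the timing an optimistic bound on production alone.

```python
# Figures quoted in the text; Uber's numbers are global, not US-only.
uber_rides_per_week = 160e6
waymo_rides_per_week = 100e3
uber_drivers = 7e6
tesla_cars_per_year = 2e6

waymo_share = waymo_rides_per_week / uber_rides_per_week
print(f"Waymo vs Uber weekly rides: {waymo_share:.4%}")  # 0.0625%

# Assumption: one robotaxi replaces one Uber driver, and all Tesla output goes to robotaxis.
years_for_10pct = 0.10 * uber_drivers / tesla_cars_per_year
years_for_full_fleet = uber_drivers / tesla_cars_per_year
print(f"10% of Uber's driver count: {years_for_10pct * 4:.1f} quarters of Tesla output")
print(f"Entire Uber driver count: {years_for_full_fleet:.1f} years of Tesla output")
```

Even if only a fraction of production went to robotaxis, 10% of the driver count is a matter of quarters and the whole fleet a matter of years, which is the shape of the claim above.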

I expect autonomous cargo delivery (e.g. Amazon packages, trucking, etc.) to lag a bit behind. This is partly because the leading players are all focusing on the taxi use case first, and partly because cargo also needs a separate solution for unloading it from the vehicle. It could easily take 3-5 years from when we hit 10% penetration for autonomous ride hailing to when 10% of Amazon packages are delivered without a driver. Food could be an exception: the customer is almost always present for the delivery, so they can just take the food out themselves. There is also a future in which drones beat AVs, particularly in food delivery, though I think more likely we get a mix of both.

Other Robots

There is no question that modern AI techniques are good enough to make capable robots. The problem is mostly training data, and to a lesser extent the quality and cost of robot hardware[11].

A lot of people are currently jumping into robotics, so with many shots on this target some kind of progress seems guaranteed. Self-driving has shown that it can be a long road from demo to useful product, but I don’t think the road will be quite as long for robotics. AI techniques are far better now than they were when AVs started, and error rates don’t have to be as low.

Directionally, I am skeptical of humanoids, and I like the recent work from Pi. But things are early and I’m keeping an open mind.

Complex tasks like working as a chef or construction worker still feel pretty far away. But there’s plenty of economically valuable low hanging fruit, particularly in warehouses. Amazon’s recent purchase of Covariant could be as foundational as their Kiva acquisition was in 2012. I would be surprised if Amazon doesn’t have significantly lower staffing requirements for new warehouses post-2030. However, I’m less sure how quickly retrofitting for existing warehouses will proceed, so it’s possible this is a relatively slow burn.

Lumpy capability profiles

Saying that AI will do “typical white-collar work” is a convenient shorthand, but it’s not quite right. AI is already superhuman at many things, while struggling with others we find trivial. This pattern is going to persist. We may well get AI research mathematicians before we get AI truck drivers.

Can we say which areas will advance fastest? One heuristic is to look at the error rate requirement of an application versus the training data availability, which I’ve written about before. One step further out, as human training data is exhausted, progress becomes about synthetic data and reinforcement learning. OpenAI o1 and AlphaZero are the canonical examples here. The key question becomes “where is the world rich in feedback?”. Some problems lend themselves to “playing against the wall” in one form or another, which can give you huge leaps overnight. Others are speed-limited by gathering data from the real world, which could mean much slower progress, though sufficiently good simulations might blur the line a bit.

Labour Force Impact

Over the next 5 or even 10 years, I expect fairly moderate labour force impact, particularly in Western countries.

At the low end, a lot of the work that is currently outsourced to low-cost countries will migrate to AI: call centers, customer support, data entry, bookkeeping, back-office processes, entry-level coding, entry-level marketing, etc. Roles in the West that have not yet been outsourced will take a hit.

This could feel particularly transformative for small and mid-sized companies, which lacked the scale or sophistication to outsource work in the past. Moving work to AI should be more approachable, more like training a local employee. Since SMEs are often starved for labour and their staff frequently juggle several roles, I think this will mostly show up as productivity gains. We might even see an SME renaissance, with net employment gains rather than job losses.

In the middle, for a typical office job, it seems like we will also get mostly augmentation, not replacement. I am a little surprised to hear myself say this, but I can’t paint a coherent picture of AI entirely replacing a typical office worker on a short timeline[12]. Every worker may effectively become a manager of a team of AI assistants they can hand tasks off to, but people still seem required as the glue between the AI and other humans, or between AI and the physical world. A few roles will disappear, but the physical, social and political aspects of jobs still need doing by humans. If this was 25% of the job before, it might be 75% of the job now, which means the whole system has only sped up 3x. Even if the AI did the rest of the work 1000x faster, the overall speedup would be capped at about 4x, because the human quarter of the job doesn’t get any faster.
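The speedup argument here is essentially Amdahl’s law: only the part of the job the AI can do gets faster. A minimal Python sketch, using the 25% human share, the 3x and 1000x figures from the paragraph, and my own choice of 9x as the AI speedup that yields exactly 3x overall:

```python
def system_speedup(human_fraction: float, ai_speedup: float) -> float:
    """Amdahl's law: only the (1 - human_fraction) share of the work is accelerated."""
    new_time = human_fraction + (1 - human_fraction) / ai_speedup
    return 1 / new_time


def human_share_after(human_fraction: float, ai_speedup: float) -> float:
    """Share of the new, shorter job taken up by the un-accelerated human work."""
    return human_fraction * system_speedup(human_fraction, ai_speedup)


print(round(system_speedup(0.25, 9), 2))     # 3.0
print(round(human_share_after(0.25, 9), 2))  # 0.75
print(round(system_speedup(0.25, 1000), 2))  # 3.99
```

A 3x overall speedup is exactly the point where the human 25% grows to 75% of the remaining job, and no AI speedup can push the total past 1 / 0.25 = 4x.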

This is doubly true for regulated sectors – doctors, lawyers and accountants are all legally required to sign off on things, even if an AI does the work. Even where this doesn’t apply, I suspect things will change slowly. For example, if you’re designing a house, in most countries there’s no legal requirement to hire an architect, and an AI could probably do a good job for you. But I think many people who currently employ an architect would continue to do so – for reassurance, project management, contractor networks, local political nous with permits, etc. Eventually this will change, but it could be a generational change.

In a few domains, such as math, coding and some parts of science, we could see more rapid and radical change. The pure information world is AI’s natural habitat, and it could potentially go very far very quickly. This is essentially the scenario Dario paints. However, this alone won’t obviously put people out of work.

Overall, in this scenario, gradual change seems the most likely outcome. I am not expecting economic growth to suddenly leap to tens of percent per year. In the long term things will change in a deeper way, but it will take time to percolate.

The truly large efficiency gains only arrive when new AI-native companies start to be born. This is the same pattern we’ve seen in every major technology transition in the past, from the steam engine to the computer to the internet. Designing around the technology from the ground up eventually brings you the radical productivity gains, but it mostly arrives slowly, in a diffusion wave led by new companies, rather than a transformation of legacy ones.

Interest Rates

A number of smart people[13] expect that AI will cause interest rates to rise. Getting into the weeds on this would require a separate post, but briefly, I think the closest historical analogues point the other way, and I do not expect this to happen.

Picking Investments

Whew, OK, that was a long preamble. The purpose of this exercise is to pick investments. How does all of that translate into actual market positions? I’ll go theme by theme, and then zoom in on a few particular companies.

LLMs and Agents

This is the big one. Even though change might come gradually, the eventual TAM is the entire white-collar economy.

Some numbers are useful to get a grip on this. Total compensation of all employees in the US is $12.6T/year, and globally it’s around $55T. White collar work is very roughly 70% of the total in the US ($9T/year) and maybe 35-40% worldwide ($20T/year).

For comparison, the combined Magnificent 7 has just shy of $2T of annual revenue at time of writing:

| Company | Annual Revenue |
|---|---|
| Amazon | $620B |
| Apple | $391B |
| Alphabet | $340B |
| Microsoft | $254B |
| Meta | $156B |
| Tesla | $97B |
| Nvidia | $96B |

The eventual TAM is academically interesting, but practically it’s more useful to get some sense of the short-term opportunity. Business Process Outsourcing (BPO) is one reasonable yardstick that doesn’t require a lot of imagination. AI isn’t an exact substitute for every BPO use case, but it can address many areas BPO can’t, so I feel comfortable using it as a lower bound. Currently the BPO market is around $300B/year[14].
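The headline numbers in this section hang together. Here is a quick Python check using only the figures given above (the revenue table, the compensation totals and white-collar shares, and the ~$300B BPO market). It is plain arithmetic, not a forecast.

```python
# Annual revenue in $B, from the table above.
revenue_b = {"Amazon": 620, "Apple": 391, "Alphabet": 340, "Microsoft": 254,
             "Meta": 156, "Tesla": 97, "Nvidia": 96}
mag7_total_t = sum(revenue_b.values()) / 1000  # about 1.95 ($T), "just shy of $2T"

us_white_collar_t = 0.70 * 12.6                 # about 8.8 ($T), "roughly $9T"
global_white_collar_t = (0.35 * 55, 0.40 * 55)  # roughly 19 to 22 ($T), "around $20T"
bpo_t = 0.3                                     # ~$300B/year BPO market

print(f"Mag 7 revenue: ${mag7_total_t:.2f}T")
print(f"US white-collar pay: ${us_white_collar_t:.1f}T")
print(f"Global white-collar pay: ${global_white_collar_t[0]:.1f}T to ${global_white_collar_t[1]:.1f}T")
print(f"BPO as a share of ~$20T global white-collar pay: {bpo_t / 20:.1%}")
```

The last line reproduces the roughly 1.5% figure in the footnote on BPO, which is why BPO works as a conservative near-term yardstick against a TAM roughly ten times the Magnificent 7’s combined revenue.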

Of the Magnificent 7, Microsoft seems best positioned to address that kind of use case with AI. Microsoft’s revenue is currently $250B/year, growing at 15%. It seems pretty clear that the expanded market opened by AI gives ample headroom to sustain that growth over the medium term.
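To put "ample headroom" in numbers, here is a small compounding sketch in Python. It simply assumes the 15% growth rate holds constant, which is an illustrative assumption rather than a projection.

```python
def compound(start: float, growth: float, years: int) -> float:
    """Value after compounding `start` at a constant annual `growth` rate."""
    return start * (1 + growth) ** years


msft_now_b = 250  # $B/year, from the text
for years in (3, 5):
    future = compound(msft_now_b, 0.15, years)
    print(f"{years} years at 15%: ${future:.0f}B/year (+${future - msft_now_b:.0f}B)")
```

Sustaining 15% for five years means adding roughly $250B/year of new revenue, the same order of magnitude as the ~$300B BPO market alone.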

In terms of investment picks, for the LLM and agent market specifically, my take is:

- Over the medium term, it should show up as a tailwind for the economy as a whole. I expect total factor productivity (TFP) growth to be at least as good as in the 1990s, and probably better. You likely won’t go too far wrong just owning the S&P 500.

- If I had to pick just one stock for the next five years, I would pick Meta. AI supports their current business in multiple ways, and they have potentially huge new opportunities for SMB and B2C applications of LLMs. For more detailed thoughts on that, I have another post coming up, but I largely agree with this.

- Microsoft also seems very well positioned, and over a slightly longer timeline their opportunity is probably the largest. They are executing well, and they have very deep strength in B2B sales, which fits well for selling worker-augmentation or worker-replacement AI services. Technically they have leading-edge models and a large stake in OpenAI.

- Amazon seems more of a passive beneficiary, but is still likely to do well. They may not have a direct application-level offering, but there are clear tailwinds for AWS, buttressed by their Anthropic relationship and open models like Llama. On a slightly longer timeline, robotics should transform their retail business.

- Google has the third-place cloud, and I think it will remain in third place for B2B AI services[15], short of them pulling out a huge technical lead, which currently looks unlikely. That is not a terrible place to be, except that their search business is more open to disruption than it’s been in a long time. The risk/reward balance from AI seems worse for Google than any other big tech company.

- Things seem broadly neutral for Apple.

- Nvidia and Tesla have extreme valuations, which makes them somewhat less attractive, but they can still go higher in best case outcomes. More on each below.

- Second-tier tech companies like Oracle could do well, since AI products fit with their sales channels.

- There will be plenty of niches for start-ups, but the most important investing question is whether we see LLMs giving rise to a new pillar company (i.e. a $1T market cap), in the way that the internet gave us Google and Meta. If AI were more capital-light, OpenAI or Anthropic would fit the bill, but their huge capital requirements make them almost de facto subsidiaries of big tech at this point. It’s still possible xAI or some future second-generation AI company could emerge with greater independence. We are still very early in the AI transition, and the history of search engines shows how late dominant players can emerge.

Overall, this is probably not far from a consensus take. However, nothing is ever fully priced in. If AI plays out as expected, there are excellent investment returns to be had by just owning the obvious winners.

Media models

In terms of market cap creation or destruction, media models are a relative side-show to LLMs. AI is a transformative tool for media authoring, and cultural impact seems destined to be large, but most of the engagement will likely accrue to incumbent social networks. Since social networks already seem close to maximally engaging, it’s not clear this really changes anything fundamental[16]. You may get some investable transformations in other industries like games, movies or music, but those are relatively small specialized parts of the market, and the outcomes are mostly unclear to me. There are probably some good start-up investments here.

Autonomous Vehicles

How much is the AV market worth? The FT does a simple back of the envelope:

Americans drive about 3tn miles a year. If 10 per cent of that travel shifts to robotaxis, with passengers paying the roughly $1 a mile it costs to ride a city bus, there could be $300bn of revenue. At a 50 per cent operating margin, valued on the 15 times multiple of operating profit that carmakers such as Ford command, the result is more than $2tn of value.

This seems reasonable on a five-year time horizon. In the longer term, full global adoption (5x) times an increasing trip fraction (10x) would give a 50x larger market at today’s prices; even with falling prices per mile, that could still mean 10-30x more. So $3T+ of revenue and $20T+ of market cap creation is plausible.
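The FT’s back-of-the-envelope and the longer-term extension can be written out in a few lines of Python. The inputs (3tn miles, 10% share, $1/mile, 50% margin, 15x operating profit) are the FT’s; the 10-30x multiplier is the estimate above, already net of falling prices per mile. Nothing here is a model of how prices or margins would actually evolve.

```python
def robotaxi_value(miles_per_year, share, price_per_mile, margin, multiple):
    """Revenue and implied enterprise value for a robotaxi market (FT-style estimate)."""
    revenue = miles_per_year * share * price_per_mile
    operating_profit = revenue * margin
    return revenue, operating_profit * multiple


revenue, value = robotaxi_value(3e12, 0.10, 1.0, 0.50, 15)
print(f"revenue ${revenue / 1e9:.0f}B, value ${value / 1e12:.2f}T")  # revenue $300B, value $2.25T

# Longer-term multiplier from the text: 10-30x, net of falling prices per mile.
for multiplier in (10, 30):
    print(f"{multiplier}x: revenue ${revenue * multiplier / 1e12:.0f}T, "
          f"value ${value * multiplier / 1e12:.1f}T")
```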

The problem is that existing AV leaders are highly valued already: Tesla currently has $1.2T market cap, of which probably $900B is hope value. Waymo is part of Alphabet’s $2T market cap. The potential market is big enough that you could still do fine here, but neither gives you an ideal setup.

One option is to short all the legacy car makers, who I expect will do very badly in the transition (on top of their existing struggles with the simpler problem of EVs and China), but shorting is a difficult game.

My current favorite way to bet on AVs is Doordash:

- Food delivery benefits no matter who wins the AV race, or even if alternative modalities such as drones win.

- AVs seem basically plug-and-play for food delivery. The customer is usually present, so you don’t have the last meter problem which may delay other package delivery applications.

- Ride hailing seems destined to become more competitive. There’s nothing to stop a new entrant like Tesla competing with Uber, which should compress margins for robotaxis. But Doordash’s moat seems more likely to remain intact, and margins should improve. The restaurant network is slow to build and requires physical deployment of order printers. Doordash’s market share is 65% and growing; that is not easy to replicate.

- Doordash’s current market cap is $63B, with fairly thin economics, so the stock should be a relatively sensitive bet. I did a simple analysis and came up with a 3-5x increase in enterprise value in an autonomy scenario.

- However, this could take 10 years to fully play out. 4x over 10 years is 15% IRR, which is not necessarily better than owning Microsoft.
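The return figure follows from the compound annual growth rate implied by a total multiple. For a buy-and-hold position with no intermediate cash flows, this equals the IRR. A quick Python check across the 3-5x range from the analysis above:

```python
def annualized_return(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by a total return multiple over `years`."""
    return multiple ** (1 / years) - 1


for multiple in (3, 4, 5):
    print(f"{multiple}x over 10 years: {annualized_return(multiple, 10):.1%} per year")
```

That gives roughly 11.6%, 14.9% and 17.5% per year, so even the top of the range is only a few points a year better than a strong compounder.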

Other Robotics

The addressable market for robotics is ultimately all blue-collar work, which is about $25T/year at time of writing. There are also huge new markets in home robotics. However, this could take a long time to play out. Robotics feels at least five years behind LLMs, even allowing for some catch-up.

I don’t see any particularly obvious ways to get good exposure to robotics via public companies, so this may be an area mostly for start-up bets. It’s unclear if the current generation of companies will make it, or if they’re still too early.

In the long run, I think Apple is the natural owner of home robotics. Even if they remain asleep at the wheel for a decade, their brand and hardware expertise seem like natural fits. This is still far off, but if Apple stock were to fall out of favour due to having no obvious successor product to the iPhone, I’d consider picking some up.

Tesla does have a reasonable position with Optimus: there is genuine synergy with their AV work and their manufacturing expertise. They can also dogfood it in their own operations. However, many things remain unclear: what market is addressable on what timeline, are humanoids the right bet, etc?

On the start-up side, there is a big recent wave of companies with top-tier talent and large funding rounds. This is high risk even by start-up standards, and it may still be too early. But it’s at least plausibly the right time, the market seems big enough for many winners, and there are high barriers to entry that should make these companies more durable than LLM wrappers. If I had to pick where AI would birth a new pillar company that goes from being worth nothing to $1T, robotics seems like the place.

Semiconductor companies

Semiconductors are not my specialty, so take this next part with a pinch of salt. That said, I expect things to barbell in the medium term. Right now Nvidia is king, and they will likely hold that crown for a few more years, but I don’t think their monopoly can endure. Longer term the players below them (physical fabs) and above them (user-facing application companies) seem to have the more durable moats.

Nvidia’s customers want them to have competition, and are actively working to make it happen. All the hyperscalers have custom AI chip projects, and Google’s TPUs are quite mature. OpenAI has a chip project, and there are many startups taking swings. AMD also has a credible offering. Right now CUDA lock-in is enough to mostly insulate Nvidia from all of this, particularly for training and research workloads. However, porting inference workloads is more feasible, and inference is probably at least half the market[17].

If we get good AI automation of coding and chip design, that could push things further and faster. Nvidia is essentially a ‘software’ company, and if AI drives the cost of software towards zero, its advantages could evaporate[18]. Moving away from CUDA becomes a non-issue if AI does it for you.

Foundries such as TSMC have a much more secure position, at least modulo geopolitical risk in Taiwan. Samsung, Intel and new entrant Rapidus all have a mountain to climb, but Intel could plausibly be back in the game around 2027, assuming they can secure the very large amount of capital required[19]. AI is still a relatively small fraction of volume for foundries though, so you’re not getting high beta here in the short term.

Biology

AI is clearly having a huge impact in biology, but technically I am even further from this than from semiconductors, and have no idea how to bet on it. Alphabet has a strong effort (Isomorphic Labs), but it’s far too small to matter to the overall stock. Start-ups might be the way here? For public exposure, perhaps it’s a tailwind to existing big pharma?

Insulated Beta

If you want an investment that just rises with (AI-juiced) GDP, but is mostly insulated from disruption, I think just owning a broad index will do fine. Possibly a few sectors get more of the tailwind and less of the disruption (e.g. payments companies like Adyen and Stripe seem plausible), but I’m not confident enough in that to justify a lot of concentration.

Obvious Losers

Who might be worth shorting? Legacy car makers seem very vulnerable. Europe in general seems weakly positioned. BPO companies like Infosys are another candidate, though it’s not impossible that goes the other way. Some large SaaS companies like Salesforce could find themselves suddenly less necessary, though they could also navigate it. Overall it seems easier just to back the winners.

Second-order consequences

There will be lots of second-order consequences. Generally, I’d avoid betting on them. Prediction is hard, and chains of probabilities quickly become small. There is plenty to do just focusing on the first-order winners.
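A tiny Python illustration of how fast chained probabilities shrink. The numbers are hypothetical: a second-order thesis with five links, each assumed to be 70% likely and independent of the others.

```python
from math import prod

# Hypothetical: five independent links in a second-order thesis, each 70% likely.
link_probabilities = [0.7] * 5
print(f"Chance the whole chain holds: {prod(link_probabilities):.1%}")  # 16.8%
```

Each step looks like a good bet on its own, yet the full chain comes true less than one time in five.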

However, some of them seem so inevitable that they may be worth thinking more about. The most obvious is urban land value changes driven by autonomous vehicles, particularly the vast repurposing of retail parking space in the US. Exactly how this plays out is hard to say, since it’s not clear how that land can best be redeveloped.

Where is the bubble?

Almost every major tech transition produces a bubble, and AI will surely see an almighty one. I don’t think that bubble has really begun yet. Which stocks are going to the moon, fundamentals be damned?

Frontier labs like OpenAI, Anthropic and xAI would be the obvious candidates, but as long as they remain private, the animal spirits must go elsewhere. Nvidia is the next most obvious place, and it’s still in rational territory. Tesla also fits the bill to some extent. But I think true bubble-type behaviour will probably require smaller stocks with a purer AI focus. Maybe some 2025 or 2026 IPOs will be candidates.

Conclusions

This scenario manages to be both radical and a little bit boring. More extreme outcomes are possible, and I will analyse that in the next post.

Footnotes

1. And finished training in August 2022.

2. These numbers are only correct to order of magnitude. It depends exactly what you count (e.g. the cost of the cluster vs the cost of the training run). Dylan Patel puts the cost of GPT-4 as high as $500m.

3. The compute won’t necessarily all go towards pure Chinchilla scaling of base models. Some of it will go towards synthetic data and RL (e.g. for reasoning models like o1). So this isn’t quite an apples-to-apples comparison to past model generations.

4. I’m using o1 as the main example for simplicity, but I’m also very bullish on test-time training, which promises something similar via an entirely different mechanism. With multiple shots on target, I expect inference-time scaling to work out one way or another. Current AIs have response times of ~1 second, but response times of a week or more would be perfectly acceptable for many tasks. There are about 600k seconds in a week, so that gives about 6 OOMs for inference-time scaling laws to operate, which is a lot.

5. Specifically, tasks where it’s feasible to collect the reward signals needed to drive RL. I think enough economically useful work lies in this bucket that the general argument in this section holds up, but it’s definitely not the case that o1 allows you to improve at arbitrary tasks. For the bear case here, I recommend this essay by Aidan McLaughlin.

6. This Epoch report makes the case. Some of the major players have also started to build optionality around data center sites for the $100B model generation.

7. Governments are clearly aware of the strategic nature of AI, so depending on the capability set this may happen. There is already high-level talk in the US of an AI Manhattan Project.

8. This would be a slow climb, both because Moore’s Law is now very long in the tooth, and because a lot of the application-specific juice has been squeezed in the last few years. Progress could still arrive in the form of an algorithmic breakthrough, or a radically new chip design (for example mortal computers à la Extropic).

9. You can definitely make something work on Android (e.g. like this), but it’s clunky and frowned upon. On iOS things are even more limited; a general solution seems unsupported. Though someone else might know tricks I don’t.

10. Though probably still with remote safety drivers.

11. I think the lack of decent touch sensing is also a problem.

12. At least without full-blown superintelligence, which is excluded in this scenario.

13. See Tyler Cowen here, Leopold Aschenbrenner here, and Zvi Mowshowitz here.

14. That’s roughly 1.5% of global white-collar wages, but it’s concentrated in certain countries. BPO makes up around 7–10% of GDP for India and the Philippines. There could be some shocks there.

15. Or maybe worse. They are currently 4th.

16. There will be winners and losers among creators, much like you see with the rise of any new format. It’s possible this also results in the birth of new networks, but Meta has been effective as a fast-follower on every new trend thus far, so it seems unlikely they would miss out.

17. Though possibly this workload gets at least partly absorbed by old training clusters?

18. Tamay Besiroglu makes the opposing case that while current-quality software prices could go to zero, software complexity could expand so much that prices remain unchanged or go up. This is certainly possible, though for the case of Nvidia my own guess is that it won’t apply.

19. Which is very hard, despite huge subsidies.